Recently we ran the second trial of our AccessLab project. We are iteratively developing a workshop format that works across a wide range of audiences, helping people to access and use scientific information.

AccessLabs currently consist of two workshops – one short workshop to prepare the science researchers, followed by a full-day workshop where we bring together science researchers with a chosen audience. In the full-day workshop we cover the basics of finding and judging scientific information, and then pair the participants (one researcher with each non-researcher) to co-research a topic of interest to the non-researcher. You can read the write-up from our first pilot event here.

In this workshop FoAM was joined by our collaborator Ivvet Modinou, the Head of Engagement from the British Science Association, and by Dr. Lotty Brand from the University of Exeter. Lotty was a previous AccessLab participant and came to observe the workshop, contribute to the content, and give additional feedback. This post covers some of the changes we made to the format, what worked and what didn’t, the feedback received, and what we’d like to change next time.

Individual task – what sources of information do you use?

In the preparatory workshop, the science researchers were asked to list the sources of information they used to decide which way to vote during the EU referendum. Each source was written on a separate piece of paper, and placed along a trust scale. As last time, sources of information that people knew they did not trust were routinely used, friends and family featured heavily, and there was little use of primary information (e.g. peer-reviewed economics/politics research did not feature at all). The science researchers used a mean of 5.4 sources of information each for this task (in our previous workshop pairing science researchers with artists, the researchers used 4.6 sources on average for the same task).

In the main workshop, we asked everyone to list the sources of information they would use to find out what contributes most to the problem of marine plastic pollution, so that they could decide what they could do about it. The results were remarkably similar to those from our previous workshop, where we asked where people would gather information if they had been diagnosed with leprosy. Now that they were within their comfort zones, science researchers commonly listed peer-reviewed scientific research, while the councillors/community group leaders mostly listed secondary sources like conservation charities. As previously, friends/family/colleagues appeared in both groups, but it was clear that the researchers had access to more people with knowledge in the specific field of marine research. On average, science researchers used 5.1 sources of information each, while the councillors/community group leaders used 3.9 (in the previous workshop, these averages were 4.5 and 3.0 respectively).

The science researchers consistently seem to use more sources of information to answer questions, regardless of whether those questions relate to their specialist topic. When they are asked a question relating to science they have, as expected, knowledge of and access to specialist information and contacts in the field. We believe that this disparity (which is a form of knowledge inequality) can be addressed through an approach like AccessLab.

General introduction

Our focus for the introduction is primarily: ‘science is useful, we should all be able to access it’, ‘this is where/how science is published’, and ‘science publishing/accessibility has gone horribly awry (by accident/history) and that’s what we’re trying to fix now, scientists and non-scientists alike’.

The slides from the talks are available openly here.

This time we included a new section on ‘what is a journal’ and ‘what is peer review’, based on feedback from the previous pilot. This was written and presented by Dr. Lotty Brand, who participated in the previous pilot. We received feedback that this was very useful, but also that it could make science seem unreliable. On balance this is a realistic portrayal, but it may be worth simplifying this section a little so participants don’t get overwhelmed by all the potential problems. We could also replace some of this with simple information on which parts of a paper to focus on: the abstract and discussion could be read first, followed by the introduction, and then possibly the methods and results last if participants want to know more and those sections seem accessible. If the abstract mentions technical words that don’t make sense, it’s worth checking the introduction to see if those terms are explained. We should also be sure to mention in this section the difference between experimental papers and review papers, and suggest preferentially using review papers as they discuss lots of previous studies and are often less technical/detailed.

We received very positive feedback for covering the history of scientific publishing, and how we’ve ended up in a situation where research is so inaccessible – this was new for several of the researchers as well as the councillors/community group leaders, and was simplified from the previous pilot.

Some common questions that came up that we could explicitly cover in an activity (which we did in the last iteration of the workshop, and may be worth bringing back in):

- Who funds the research? (tax, industry, charities/trusts etc.)

- How do funding bodies decide what to fund?

- How do scientists decide what to research?

Fact checking case studies

In our previous pilot we received good feedback for a session where we took a media article and traced it back to the original research, talking about how it ended up as a news article, and some simple approaches to judging the reliability of the research.

This time, after the case study, we put people in pairs (one researcher with one non-researcher) and handed out a different science-related media article to each pair. They then had some time to fact check the article together, and tell everyone what they found. Based on the feedback, this was very well received, and it is something that we will keep doing in future sessions.

There was some pushback from researchers with respect to the simplified approaches to judging the reliability of research – particularly regarding our suggestion that larger sample sizes could usually be considered more reliable. There is a delicate balance here – we need to present simple approaches that people with no science training can use, but science researchers are trained to always think of the caveats, so they can be reluctant to embrace any simplification. The same information was presented at the first pilot, with no pushback. One option to consider is leaving a bigger gap between the two workshops, so that content generated during the preparatory workshop can be used in the main workshop – this would help ensure all participants were on board. Another option is to shift the focus to replicability – larger sample sizes can indicate that research is likely to be more replicable, but it is not that simple, so instead (or as well) we could talk about checking whether other people have found a similar result. This also opens up the opportunity to talk about how, by definition, only new findings are considered news by journalists, and how this directly contradicts the way science works, where new findings aren’t trusted until they’ve been replicated.

Co-research session

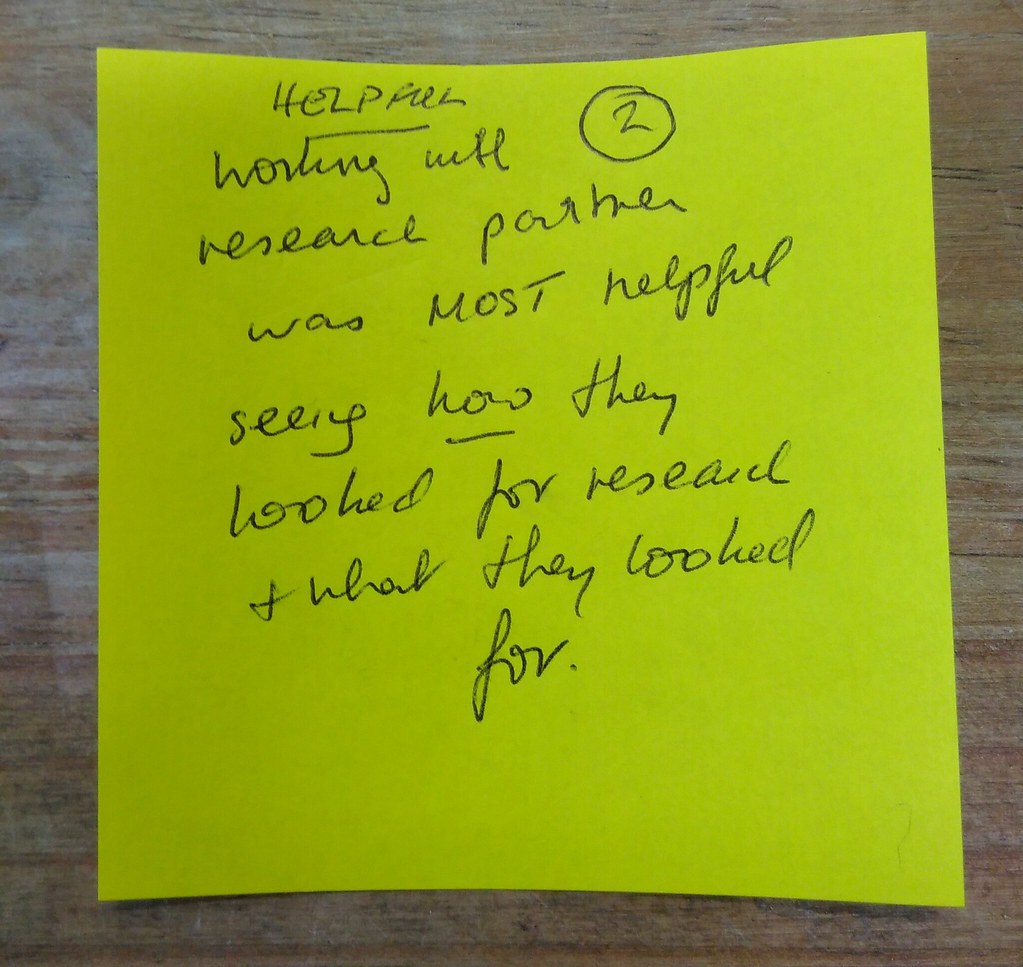

In the first pilot we allowed 1.5h for unstructured time to research a topic together – this is the culmination of the day and should leave the non-researcher participants with tangible outputs that are of use for their own lives and work. Last time we had feedback that this was too short, and that people spent much of the time honing the questions to make it possible to perform research. This time we made sure the researchers knew about this issue in advance, and extended the session to 2h.

In the feedback, only one person remarked that 2h was too long, and some said it was still too short. Several of the researchers commented that it was very hard to hone down the questions – this may reflect the fact that our researchers were more junior than last time, but we also felt that the questions were less well defined from this participant group than they had been from the artists so it may reflect different working practices. Regardless, the act of trying to hone questions is a valuable experience for both parties.

A few people said that they would like more structure to this session or even some pre-defined outputs. Next time we run the session we may explicitly split it into two parts – firstly to hone the question (40 mins) and secondly to do the research (1 hour). After honing the question it may be helpful for each pair to explain to the group what the question was at the start, and what the final question to be researched was, so that everyone better understands how to formulate questions for research. This would also be useful information for us. So far we’ve said that the research time is just for them, and that we don’t need to know what they do in this time, but it seems probable that this confidentiality isn’t necessary. Another option is to do more work honing the questions with the participants over email before they arrive at the workshop. Our feeling is that this might not work well, as email contact is very patchy with many participants and we try to keep emails to a minimum – face to face may work better.

General points

The first pilot was aimed at artists – when recruiting artist participants we made sure to check that none of them had a background in science. However, this time when recruiting local councillors/community group leaders we didn’t ask about their backgrounds. We ended up with some participants who already knew how to do scientific research, which defeats the object of the workshops. For future workshops we need to specify that the non-research group has no background in science.

The emphasis on having good food and an informal location was once again noticed by many participants – we should continue to place great effort here as it affects the way people behave. For rolling out the workshops further afield we need to think about what is possible for others to recreate. We may suggest recruiting a local chef who wants to gain exposure, and specify details about what makes a suitable venue: nothing linked to formal education (like a university, school or library), no religious buildings that might make some feel uncomfortable, and the use of items that are not traditionally associated with work/education and make people feel more at ease (such as simple soft furnishings, plants, and non-corporate and non-disposable cutlery/crockery/working materials).

One participant mentioned that a shorter day might help allow a greater diversity of people to come – in future sessions we need to think about what could be dropped as we gradually tighten up the format.

Balancing positive and negative messages in the general introduction is delicate – both need to be included. Some researchers stated in their feedback that they felt the message was a little negative, so we may need to tip the balance slightly here. We talk about the problems of accessing scientific publishing, and the difficulties faced when trying to make use of scientific research, but we could say more about why it’s worth accessing.

There were some issues with the venue – the space was a little limiting as we did not have access to enough tables. It was not possible to put the heating on at the same time as the projector/computers without blowing the power, so it was a bit cold, and the food preparation had to be relocated from the studio to the chef’s home at the last minute to avoid power cuts. In future we need to check whether the wiring of the venues is adequate for our needs (other things we already check include strong open wifi, adequate number of power sockets, ability to cater independently, access to tables/chairs, access times, ability to use walls to put posters up, access to water/sink/kettles, overall space for the number of people, accessibility of the venue for wheelchairs, ease of travel to the venue, and avoiding paid-for parking as this adds cost for participants).

We could add more time for people to meet each other informally, and ask participants if they are happy to share their contact details with each other. One participant asked for big name plates so that people could read each other’s names across the table. We are already aware that some participants are keeping in touch after the workshop. One community group participant contacted us to say that as a result of the workshop she is now keeping in contact with four researchers, is able to do her work better as she now knows where to find evidence to inform it, and is running an event for British Science Week. We need to follow up with all participants in 1-2 months to see if there have been any longer term outcomes.

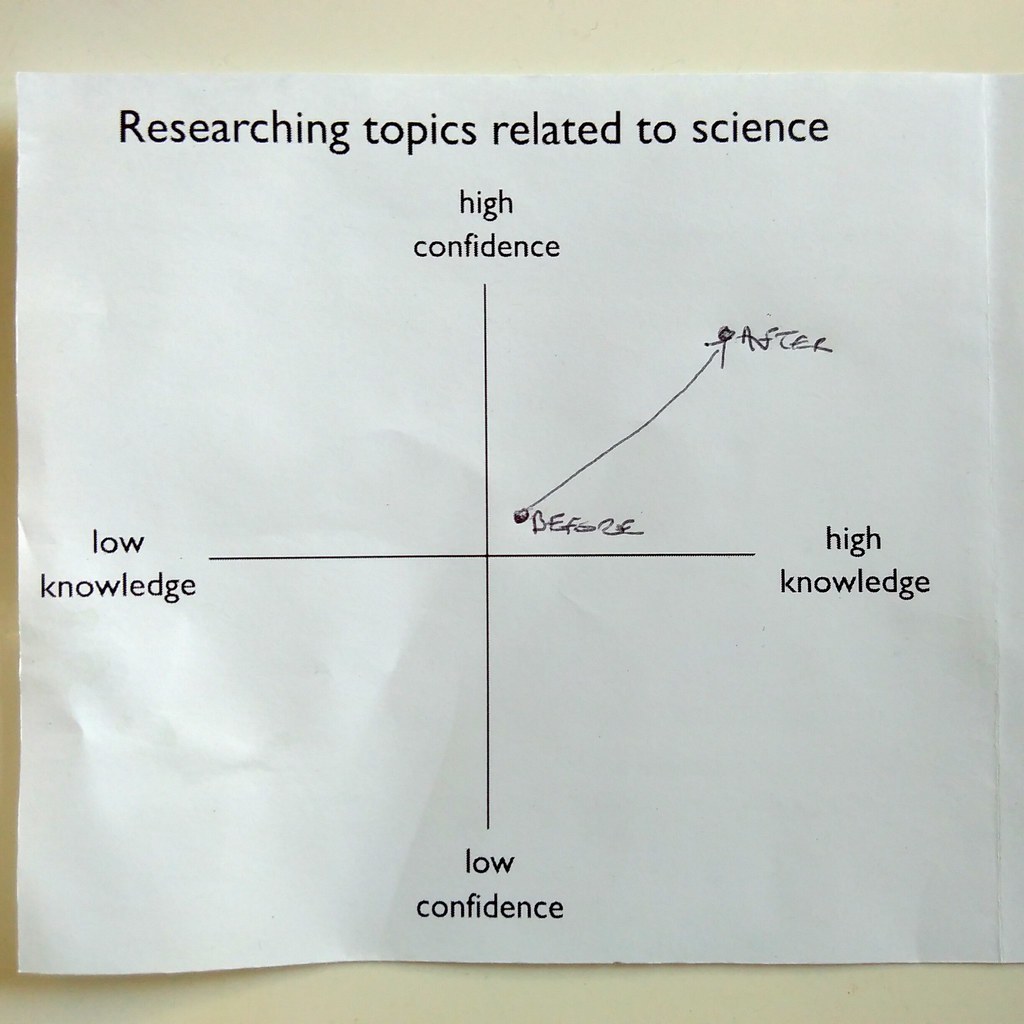

We handed out charts for people to mark off where they were in terms of knowledge/confidence researching topics relating to science at the start and end of the workshop. All councillors/community group members reported that they had increased both their knowledge and confidence through the workshop (6/6 charts received back) – this was our main aim, so it is reassuring. Out of interest, we asked science researcher participants the same question: 2 reported no change, 2 reported an increase in knowledge but not confidence, 1 reported an increase in confidence but not knowledge, 1 reported a decrease in both knowledge and confidence (perhaps a re-assessment of their relative level took place during the workshop), and 1 reported an increase in knowledge but a decrease in confidence. We also handed out charts for people to mark off where they were in terms of knowledge/confidence in communicating with people from outside their specialism. Of the science researcher participants, 6 reported an increase in both knowledge and confidence, and 1 reported an increase in knowledge but a decrease in confidence. This result is reassuring as improving communication with those outside academia was a secondary aim for the workshop. Of the councillors/community group members, 3 reported an increase in both knowledge and confidence (this was an unexpected bonus from our perspective), and 3 reported no change. On the whole, the feedback indicates that this model is succeeding in its aims. However, in future we should not use dual scales for feedback, as this confused some participants.

Future plans

We are currently seeking funding to run a further set of workshops in the South West in 2018 in partnership with the British Science Association. If successful, these will be advertised via the FoAM Kernow mailing list (subscribe here). If you are interested in participating or helping to run AccessLab, do get in touch (kernow@fo.am).

More photos from the event are available here.