We have two projects where we’re building tangible interfaces – Penelope and Viruscraft. This blog is an exploration of tangible interfaces, harvesting thoughts from the worlds of neuroscience, choreography, cognitive science and education to see what we can learn to guide our research and design directions.

First, a quick introduction to the projects:

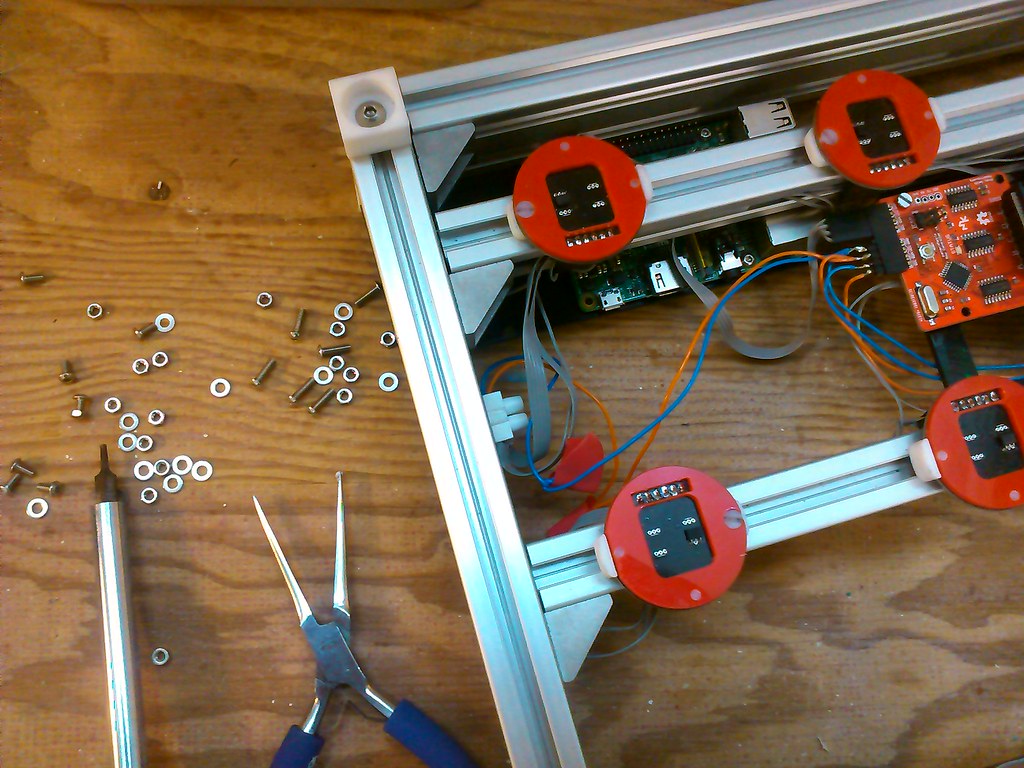

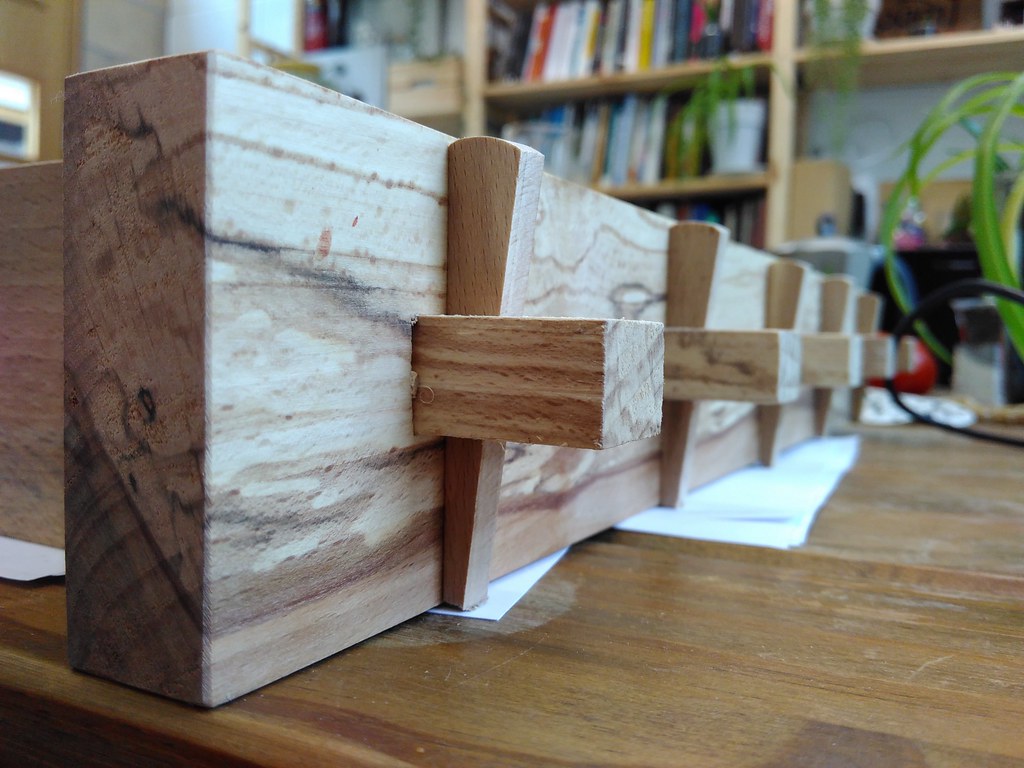

Penelope is all about weaving and coding – our everyday computers were originally built from textile technology, and replicate the pattern-manipulating circuitry. Looms are tangible interfaces, but it takes a long time to see the results – we’re speeding this up by making a new interface which can be manipulated quickly and easily by hand, with the resulting patterns animated straight away. The first version is working, and now we’re making improvements to the design, which involves learning carpentry from scratch.

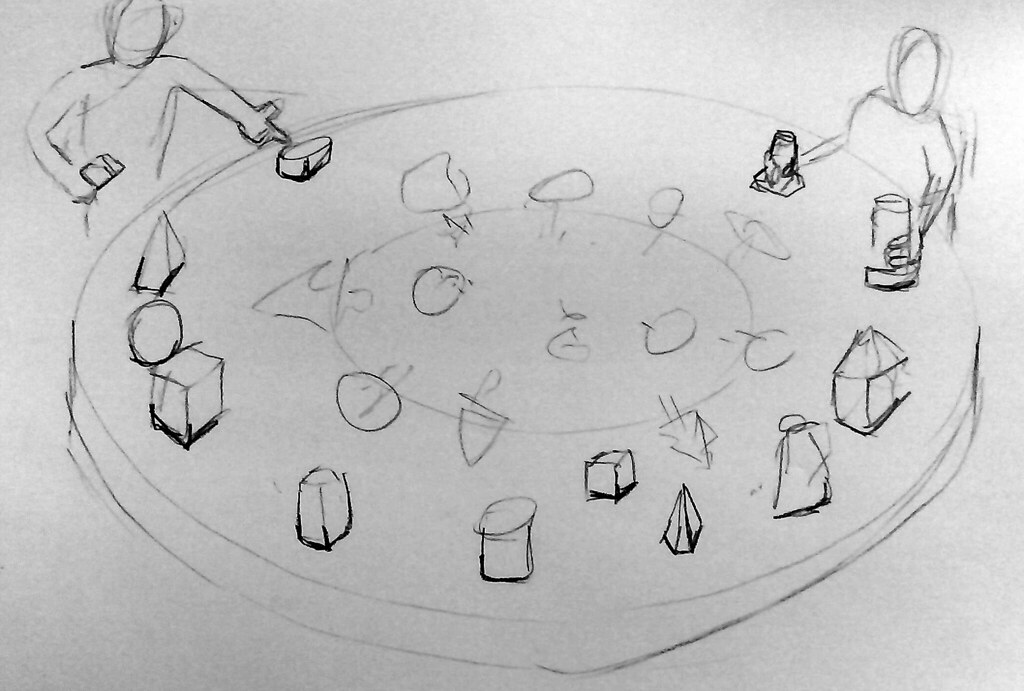

Viruscraft is all about understanding how viruses evolve to jump between different host species – like the bird flu virus suddenly being able to infect humans. Virus structure is quite geometrical, and it is the lock-and-key structural fit with the host’s cells that determines whether a virus can infect or not. The evolution of this structure is something that we are going to make explorable through building a tangible interface.

As people designing and building the systems, there’s a clear switch from screen-based work to sketching, carpentry, soldering and wiring up the electronics. We’ve noticed that we enter a state of ‘flow’ more easily, and feel rather more fulfilled after a day performing complex manipulations of physical objects with our hands.

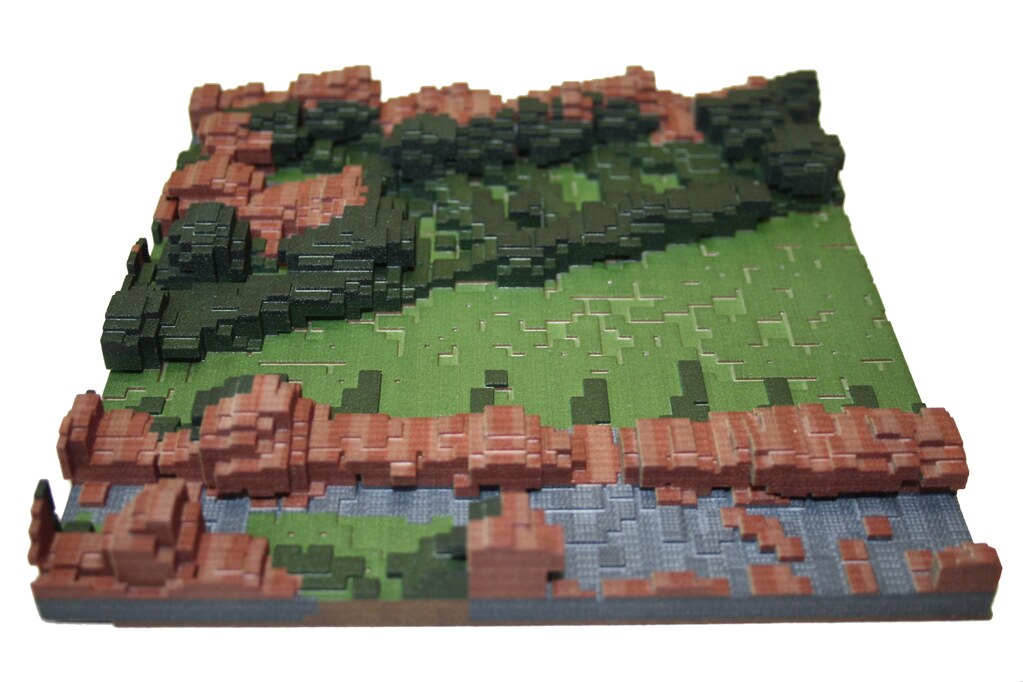

This mimics the reason we’re taking a tangible interface approach for these projects – a feeling (that’s an interesting term in itself) that screen-based interfaces limit human modes of interacting with information and ideas, and also reduce opportunities for collaboration. We often work with scientists who are struggling to understand vast amounts of data, and with some regularity we hear them say that they want to ‘get a feel for the data’ or ‘get a handle on the data’. We’ve begun exploring this through our GreenSpace Voxels project, making tangible models of cities from LiDAR data (which is made up of voxel data - voxels are just volumetric pixels). Greenspace can be separated from buildings and visualised separately in tangible 3D forms - but we’re eager to push the approach much further.
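To make the voxel idea a little more concrete, here is a minimal sketch in Python of how classified LiDAR points could be binned into voxels and then split into greenspace and buildings. This is an illustration only, not the GreenSpace Voxels code: the function names, the labels ("vegetation", "building"), the 1 m voxel size and the sample points are all assumptions made up for the example.

```python
from collections import Counter

def voxelise(points, size=1.0):
    """Bin (x, y, z, label) points into cubic voxels of edge `size`.

    Returns a dict mapping integer (i, j, k) voxel indices to the most
    common label among the points that fall inside that voxel.
    """
    bins = {}
    for x, y, z, label in points:
        # Floor division gives the index of the cube containing the point.
        key = (int(x // size), int(y // size), int(z // size))
        bins.setdefault(key, Counter())[label] += 1
    return {key: counts.most_common(1)[0][0] for key, counts in bins.items()}

def split_by_label(voxels, label):
    """Return the set of voxel indices carrying the given label."""
    return {key for key, value in voxels.items() if value == label}

# Hypothetical sample: a handful of classified LiDAR returns (in metres).
points = [
    (0.2, 0.3, 0.1, "vegetation"),
    (0.7, 0.4, 0.6, "vegetation"),
    (2.1, 0.5, 3.2, "building"),
    (2.4, 0.9, 3.8, "building"),
    (2.6, 0.1, 3.5, "vegetation"),
]

voxels = voxelise(points, size=1.0)
green = split_by_label(voxels, "vegetation")
built = split_by_label(voxels, "building")
```

Each set of voxel indices could then be turned into its own physical model, for example by stacking or printing one cube per index.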

As another motivation, we’ve found when working in a nursing home that, because tangible interfaces don’t look like computers, they fail to trigger a bunch of negative reactions from people who associate computers with bad design (e.g. small buttons and screens that are unusable if you have poor eyesight).

Beyond painted bits behind glass

The vast majority of current interface technology is what Bret Victor refers to as ‘Pictures Behind Glass’. Take out your favourite device and try using it…

‘What did you feel? Did it feel glassy? Did it have no connection whatsoever with the task you were performing? With an entire body at your command, do you seriously think the Future Of Interaction should be a single finger?’

The MIT Tangible Media Group refer to this type of technology as ‘Painted Bits’, criticising graphical user interfaces based on intangible pixels that take little advantage of our abilities to sense and manipulate the physical world.

Another common criticism of screen-based technology is that it does not work well for collaborations between people who are in the same physical space. Some agricultural researchers we work with recently saw a very basic three-dimensional model of a water catchment area used in a parliamentary decision-making setting on farm water management. They noticed that the policy makers moved around the 3D map, shifting their perspectives and objects within the model to see the effects – they worked and moved together, exploring the information fully from different angles.

Although our current internet-enabled technology has without a doubt dramatically improved our abilities to collaborate over long distances, screen-based graphical user interface (GUI) approaches should really only be considered the very start of this process.

There are some great examples of tangible user interface designs – an early favourite (2006) is the Reactable for making music through manipulating symbol-covered blocks on a translucent table with a camera and projector underneath. Similarly, Tangible Pixels (2011) are essentially moveable pixels/voxels rigged with sensors and feedback in the form of light/colour. Promising dynamic shape display technology from the Tangible Media Group at MIT gives a hint at how much further we could push TUI technology – it’s worth taking a look at the inFORM video (2013). But they also point out that:

‘TUIs have limited ability to change the form or properties of physical objects in real time. This constraint can make the physical state of TUIs inconsistent with the underlying digital models.’

The group has since moved some way towards a vision of computationally reconfigurable materials, for example the rather appealing pneumatic tangible interface PneUI (2014), and exploring the development of more dynamic changes of physical forms. Programmable self-assembly robotics is a step along this way, for example the Thousand Robot Swarm from Harvard University in 2014 and various projects from the MIT Self-Assembly Lab – once in the realm of nanotechnology it is easy to imagine dynamic shape interfaces becoming a useful reality.

It’s worth considering the hype of Virtual Reality in this context, as it is essentially a screen strapped to your face, making little of our wealth of physical senses. VR has been described by a friend as ‘a lot of expensive technology that gets rid of your body’, since the body is the main thing you don’t experience.

For now, within the limitations of not being MIT, we’re focusing on how we can build low-cost tangible interfaces that could be made quickly and widely accessible, and how we can make these so they are flexible across projects rather than being single-purpose.

We’re also interested in how to make causality more transparent. With the growth of data visualisation and a rather relentless, unthinking move towards ‘digital’ modes of exploring data/simulations, the connection between what the user is changing and the result of that change can often be lost – hidden in a black box of code running in the background. Even when the code is made visible, like in live-coding, it usually isn’t straightforward to understand for those without prior experience of programming. There are some great examples of interactive exhibits, which are essentially tangible interfaces – for example orreries beautifully illustrate and predict the movement of planets and moons, and can be moved by hand into different positions until understood from all angles. A screen-based system we’ve enjoyed playing with recently is Loopy, developed by Nicky Case – here’s a simplified example we made using Loopy to create a playable version of the science behind why badger culls don’t work for reducing cattle tuberculosis. It’s not a big jump to imagine this as a very flexible tangible interface that could be used to play with all manner of things – if anyone could deeply explore the badger/cattle simulations, or climate mitigation options, through an interface like this, we might even see positive results for the development of evidence-based policy decisions.
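As a rough illustration of the kind of cause-and-effect model that such an interface would expose, here is a small Python sketch of a signed causal graph in which a nudge to one node travels along the arrows, one hop per step. It is loosely in the spirit of Loopy but is not Loopy’s actual algorithm, and it is not our badger/cattle model: the `propagate` function and the rabbits/foxes example are hypothetical stand-ins.

```python
def propagate(edges, start, nudge, steps):
    """Push a nudge through a signed causal graph, one hop per step.

    edges: list of (source, target, sign) tuples, with sign +1 or -1.
    Returns a list of dicts, one per step, mapping each node to the
    signal arriving at it on that step.
    """
    history = [{start: nudge}]
    for _ in range(steps):
        arriving = {}
        for source, target, sign in edges:
            signal = history[-1].get(source, 0)
            if signal:
                # A "+" arrow passes the change on, a "-" arrow flips it.
                arriving[target] = arriving.get(target, 0) + sign * signal
        history.append(arriving)
    return history

# Hypothetical two-node balancing loop:
# more rabbits -> more foxes, more foxes -> fewer rabbits.
edges = [("rabbits", "foxes", +1), ("foxes", "rabbits", -1)]
history = propagate(edges, "rabbits", +1, steps=4)

for step, signals in enumerate(history):
    print(step, signals)
```

In a tangible version, each node might be a physical object and each arrow a visible connection, so that the path a change takes is something you can follow with your hands and eyes rather than something hidden in code.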

Is tangible better?

The evidence base for whether a tangible interface is ‘better’ than a graphical interface is rather lacking, but there are a few disparate fields that we can draw from. The closest thing I could think of that might provide some insight is the recent argument over ‘learning styles’. Over the last decade, people have talked more about having different ‘learning styles’ - thinking that some learn better with visual, auditory, linguistic, physical, and other approaches to learning. Indeed teaching practices have been adapted to this idea, with 76% of UK teachers surveyed saying they used ‘learning style’ approaches in the classroom. However, academics from neuroscience, psychology and education are broadly in agreement that the concept of ‘learning styles’ is fundamentally flawed – in a public letter from March 2017 they say:

‘This belief has much intuitive appeal because individuals are better at some things than others and ultimately there may be a brain basis for these differences. There have been systematic studies of the effectiveness of learning styles that have consistently found either no evidence or very weak evidence to support the hypothesis that matching or “meshing” material in the appropriate format to an individual’s learning style is selectively more effective for educational attainment. Students will improve if they think about how they learn but not because material is matched to their supposed learning style.’

There has been quite a big movement around the idea of ‘embodied cognition’ over the last decade, spanning the realms of philosophy, cognitive science, psychology, the arts and so on – the literature is fairly impenetrable with considerable jargon (which differs in each field), and a faction that is seemingly rather unsubstantiated. The basis of embodied cognition seems to be the fact that our nervous system is spread throughout the body, and many of our most basic forms of ‘thinking’ require collaboration between our various body parts – as our bladder expands we feel it and then know what to do, if we hurt our finger we know to avoid the action that caused the pain. Indeed, the kinds of thoughts we are capable of having are almost certainly both limited by and enabled by our physical form.

Psychologists Sabrina Golonka and Andrew Wilson refer to cognition as ‘an extended system assembled from a broad array of resources’, saying that our behaviour ‘emerges from the real time interplay of task-specific resources distributed across the brain, body, and environment, coupled together via our perceptual systems’. They suggest four steps for considering embodiment in research, which could equally be applied to the design of tangible interfaces:

1. First characterise the task from the first-person perspective of the organism.

2. What task-relevant resources does the organism have access to? These could be in the brain, wider body, or environment.

3. How can these resources be assembled so as to solve the task?

4. Does the organism actually assemble and use the resources identified in step 3?

It seems fair to say that although our eyesight may be pretty great as earth-species go, neglecting all our other senses seems daft – it’s quite likely that combining the senses will give a fuller picture of a concept and may enable different solutions. Tangible interfaces will still limit the cognition and the solutions made, but perhaps in different ways, or maybe less so than a screen-based interface.

There is some evidence from a museum-based trial in 2009 that a tangible user interface had advantages for collaboration over a comparable screen-based graphical interface:

‘Our results show that visitors found the tangible and the graphical systems equally easy to understand. However, with the tangible interface, visitors were significantly more likely to try the exhibit and significantly more likely to actively participate in groups. In turn, we show that regardless of the condition, involving multiple active participants leads to significantly longer interaction times. Finally, we examine the role of children and adults in each condition and present evidence that children are more actively involved in the tangible condition, an effect that seems to be especially strong for girls.’

The study is rather flawed methodologically (low number of participants, poorly defined questions, and statistical approaches that don’t take account of co-variables) but nonetheless provides useful pilot data, with reasonably convincing evidence that more people participate simultaneously with the TUI than with the GUI.

Moving a bit wider, it’s worth considering people who use physical movement as their main mode of expression and understanding – of these, dancers seem the most extreme, constructing images based on the feeling of the body. One interesting example comes from ‘Thinking with the body’, a collaboration between choreographer Wayne McGregor, cognitive scientist Phil Barnard, digital artist Nick Rothwell and others, where an attempt was made to create a system to generate images of movement based on words typed into a computer. Nick says at the end of the process that:

‘sitting down at a desk (to use this system in the dance studio) broke the physical thinking and physical making in a way that was losing many of the benefits you were wanting to achieve’.

In another video from the same project, the benefits of physical movement are made clear – in this case for changing our perspectives on an issue:

‘Change where you are in relation to an image.

Shift where you are in relation to a sound (or shift the sound in relation to you)

Go ‘out of the picture’

Step inside of the object’

This change in perspective is something we often think about with computer games – which remain a serious medium allowing players to take on a different entity to see and explore the world from a new vantage point. This is a variation on the idea of Umwelt (in ethology) - the world as it is experienced by a particular organism - "the worlds they perceive, their Umwelten, are all different". We also use physical perspective changing in our workshop and prototyping approaches.

This blog-exploration of course suffers from being based on a leading question – if we looked for evidence that screens were better than tangible interfaces, I expect we’d also find the evidence lacking. There are some obvious benefits to screen-based interfaces – they’re cheap, relatively easy to make, the hardware is off-the-shelf, and there’s a consistency that means generally people will be able to figure them out quite quickly. However, the evidence that screen-based interfaces are rather limiting seems strong enough to warrant a thorough exploration of the design possibilities and potential applications of tangible interfaces.

Conclusions drawn

- Tangible interfaces probably make it easier for more people to be involved in a collaborative effort – typically a screen faces one way, a keyboard and mouse are used by one person – multiple screens, entire rooms of screens etc. are of course all possible, but tangible interfaces will expand the possibilities.

- Tangible interfaces allow the user to physically change perspective, and from there it might be easier to change perspective mentally. Moving around an interface in three dimensions, being able to move physical objects and then look again from multiple angles could lend itself to all sorts of interesting applications.

- There are interesting opportunities for making the connection between something you change and the result quite transparent. This is not unique to tangible interfaces but they do expand the possible ways of making these connections clearer.

- I’m not convinced yet that we can say tangible interfaces make people learn better, but I think it’s fair to say that if we invoke more senses, more people will be attracted to a system and it should be a more interesting experience. This poses clear benefits if the purpose of the tangible interface is to explore a problem and come up with a solution. The more people involved in a problem, and the greater diversity of those people, the more likely we are to reach a better solution/outcome.

- We can learn something from the psychology literature on embodiment when we design tangible interfaces - particularly by thinking more deeply about the task-relevant resources available to a human, in the brain, body or environment, and how these can be assembled to solve a particular task.