So far in the ‘play’ stage of the project, I have rendered wonderful little sections of Milton Keynes in Fluxus with both practical and slightly more esoteric outcomes. Firstly, we have a script that outputs .OBJ files using small sections of data, which can be used for both digital and tangible modelling (such as 3D printing). That’s the practical part. The more esoteric part was making the primitive object performance-ready, which I explored during livecoding events in Leeds and Newcastle by animating it. However, the long rendering times made this difficult, and that is something that must be fine-tuned in the future.

It made total and complete sense to use the data to build areas in Minecraft on the Raspberry Pi. Minecraft Pi has a pretty stellar Python API – I’d encountered it during workshops with teenagers, but never sat down to explore it properly. The majority of the GeoTIFF data extraction for previous play used Python, so porting it to Minecraft wouldn’t be difficult. I was quite curious to see how far we could take this, and after testing, the intrigue has grown deeper because of its limitations (which, I have found, tend to unearth a whole new category of investigation and options).

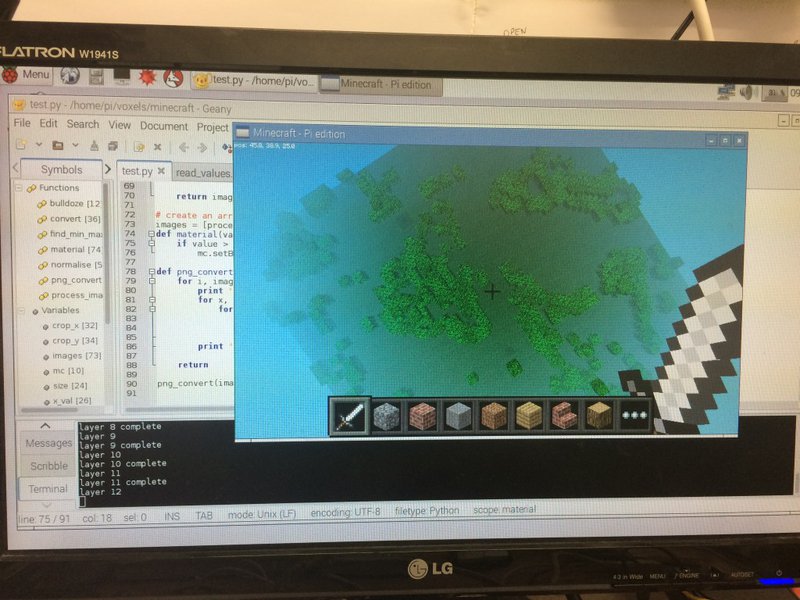

Apologies for the potato picture; Minecraft didn’t take too kindly to being screenshotted. The first experiment was to simply load in the data and see what monster arose – any voxel deemed to be denser than 0.2 (on a scale of 0 to 1) was set as a 1x1x1 grass block. Initially, any value below this was to be represented as an air block, but I later found that skipping that block entirely was quicker. It was fairly simple to port my GeoTIFF>PNG script over, and write blocks to Minecraft as opposed to writing pixels to a new PIL image. The result was fairly sparse, but represented the general gist of the greenspace of that area, and zooming out, there were striking resemblances to the GeoTIFFs.
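To make that thresholding step concrete, here is a minimal sketch rather than the actual script. It assumes the densities have already been read out of the GeoTIFF into a nested list indexed as `voxels[x][y][z]`; that layout, and the names `dense_voxels` and `write_grass`, are hypothetical. The `mc` argument is expected to be a connection from the Minecraft Pi Python API (`Minecraft.create()` in `mcpi.minecraft`), whose `setBlock(x, y, z, id)` call is the only one used.

```python
GRASS_ID = 2  # block ID for grass (the value of mcpi's block.GRASS.id)


def dense_voxels(voxels, threshold=0.2):
    """Yield (x, y, z) for every voxel whose density exceeds the threshold.

    Assumes voxels[x][y][z] holds a density between 0 and 1 (hypothetical layout).
    """
    for x, plane in enumerate(voxels):
        for y, column in enumerate(plane):
            for z, density in enumerate(column):
                if density > threshold:
                    yield (x, y, z)


def write_grass(mc, voxels, threshold=0.2, origin=(0, 0, 0)):
    """Write a grass block for each dense voxel; return how many were written.

    Voxels at or below the threshold are skipped entirely instead of being
    written as air, which avoids one API call per empty voxel.
    """
    ox, oy, oz = origin
    count = 0
    for x, y, z in dense_voxels(voxels, threshold):
        mc.setBlock(ox + x, oy + y, oz + z, GRASS_ID)
        count += 1
    return count


# Usage on the Pi, with Minecraft running (not executed here):
#   from mcpi.minecraft import Minecraft
#   mc = Minecraft.create()
#   write_grass(mc, voxels)
```

Skipping the sparse voxels is what keeps this quick: every `setBlock` call is a round trip to the game, so only the blocks that matter get sent.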

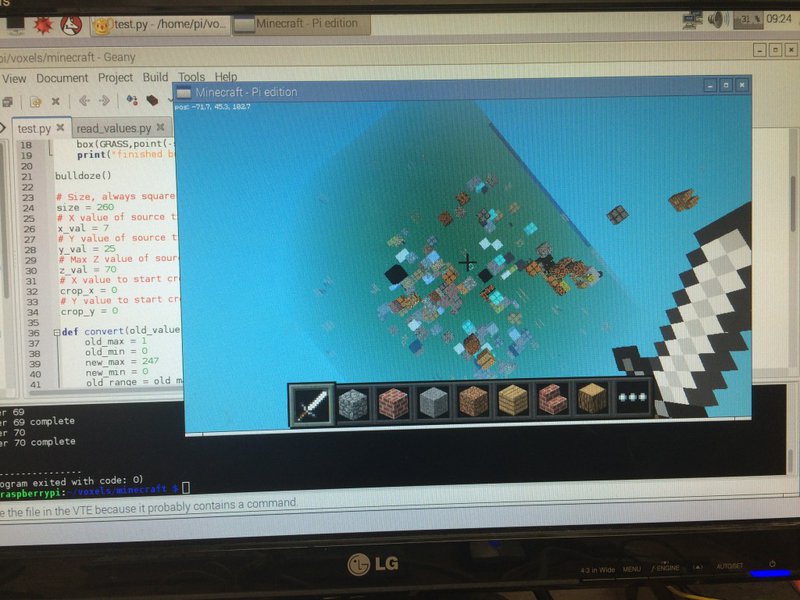

Now, I wanted to play with the block textures, to give some sort of indication of the voxel density that each block should represent. As an initial test, I converted the voxel density range to the range of textures available within the Minecraft API, so that each block would be written with a corresponding texture. Unfortunately, as can be seen above, that didn’t work great, and caused some data problems. I’m guessing that some textures aren’t available to be blindly written to blocks.

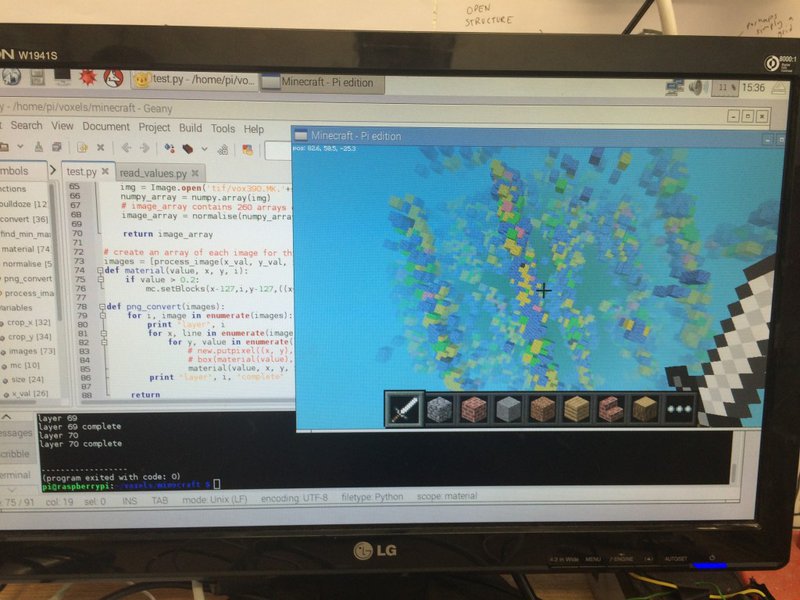

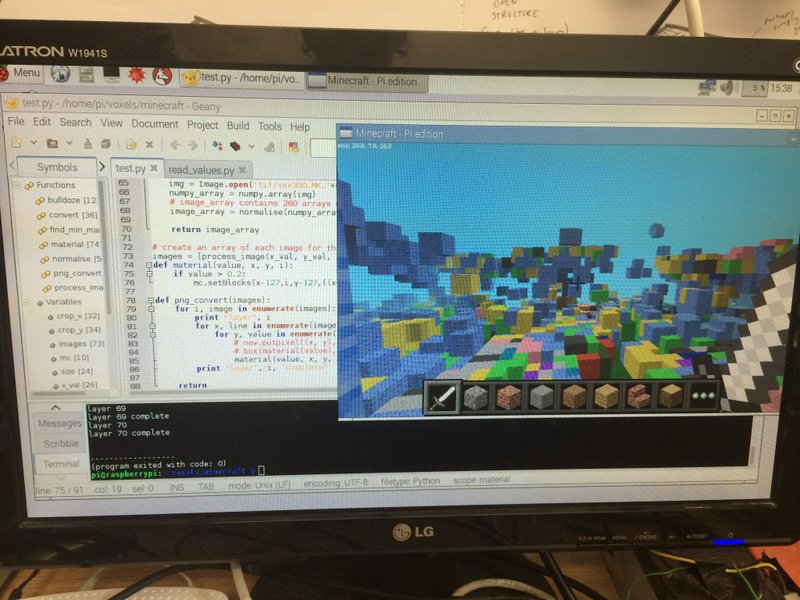

This is a second attempt, made by accessing the attributes of a given texture. A few textures, such as Wool and Wood, have secondary attributes – in the case of Wool, this is a colour range between 0 and 15. Using Wool as the texture, I mapped the voxel density value to a colour index, producing the above. Just by glancing, it is possible to see some colour patterns emerging, such as purple and yellow. To understand the values, though, the texture attribute API must be referenced, as there doesn’t seem to be any continuity in the order of the colours. Still, this opens the door to further experimentation along this thread.
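As a rough illustration of that mapping, the sketch below scales a density between 0 and 1 onto the 16 Wool colour indices. The linear scaling and the names `density_to_wool` and `WOOL_COLOURS` are assumptions of mine, not necessarily what the original script did. The colour list follows Minecraft's standard Wool data values, and it shows why the order feels discontinuous: neighbouring indices jump around the colour wheel.

```python
WOOL_ID = 35  # block ID for wool (the value of mcpi's block.WOOL.id)

# Standard Minecraft wool data values, index 0 to 15.
WOOL_COLOURS = (
    "white", "orange", "magenta", "light blue",
    "yellow", "lime", "pink", "grey",
    "light grey", "cyan", "purple", "blue",
    "brown", "green", "red", "black",
)


def density_to_wool(density):
    """Map a density in [0, 1] to a wool colour index in [0, 15].

    Values outside [0, 1] are clamped. The linear scaling is an assumption.
    """
    clamped = max(0.0, min(1.0, density))
    return min(15, int(clamped * 16))


# Usage on the Pi, with an open mcpi connection `mc` (not executed here):
#   mc.setBlock(x, y, z, WOOL_ID, density_to_wool(d))
```

With a table like `WOOL_COLOURS` to hand, a colour seen in the world can be read back as a density band, for example purple is index 10 and yellow is index 4.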

I guess this harks back to the limitations I mentioned earlier. In the case of Minecraft Pi, the current limitations seem to be the world size (255 cubed) and the materiality within it. The tile area of Milton Keynes I am writing in is 260 cubed, the tile itself being a tiny representation of the city. Sure, the GeoTIFF could be scaled down, but there is the risk of losing valuable data. Although we are still toying with the idea of future forms, this is something we could bear in mind – for now, I am thinking of basing exploration on this specific area of Milton Keynes, and branching out from there. In terms of materiality, such constraints actually inspire creativity (there you go, I said it) in resourceful and perhaps hacky ways. For example, we could create our own textures with varying opacity, and the data and area could inform the colours in some way. And what properties would these blocks have? etc.
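To put a number on what scaling down would cost, here is a small sketch of nearest-neighbour downsampling along one axis, using the 260 and 255 figures above. The function name `fit_indices` is hypothetical, and nearest-neighbour is just one possible approach (cropping or averaging neighbouring slices are others).

```python
def fit_indices(src_size, dst_size):
    """For each destination index, pick the source index to sample from.

    Nearest-neighbour style mapping using integer arithmetic only.
    """
    return [(i * src_size) // dst_size for i in range(dst_size)]


# Which of the 260 source slices survive when squeezed into 255?
kept = fit_indices(260, 255)
dropped = sorted(set(range(260)) - set(kept))
```

Along each axis, 5 of the 260 slices end up in `dropped`, so the loss is small but real, and it applies in all three dimensions.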

Yesterday was a productive day for the project. Jo is working on it with us, bringing her expertise in architecture, basketry and general tangible excellence to really make this project. She is also fantastic at organising (thank you, Jo), and we have a plan of how the next 18 weeks will pan out. We were both excited to learn that we will shortly have access to the flight path data of Milton Keynes’ birds, as well as bird species activity information within these specific locations. In the coming weeks, Jo is going to play with the .OBJ files generated by Fluxus, and begin experimenting with modelling them. Although digital modelling can be considered a step prior to tangible modelling, I’m thinking that the material forms will also inform the digital. Basically, an exciting time!